Have the market research groups recently been clamoring at your door? It seems like a weekly request via email or phone call to take "10 minutes" to answer some questions about "the future directions of flow cytometers and associated reagents." I've answered these calls so many times in the past few months that I'm starting to sound like a broken record. My hope is to perhaps just send them this link instead of spending time scoring questions on a scale of 1 - 10 with my stock answer of, "uh, maybe about a 7." So, what I'm attempting to do here is write down some loose specifications of the sort of instrument I'd like to see in the not-so-distant future, and perhaps comment a bit about reagents as we go along.

Lasers: I think the real key here, in terms of the number and wavelength of lasers, is options. If I had an unlimited budget, I think I'd probably put about 8 lasers on my cytometer (UV, Violet, Blue, Green, Yellow, Orange, Red, Far Red) pretty much covering the spectrum. I'd never dream of running all 8 lasers simultaneously, so they'd all need to have the ability to be shuttered on and off. I'm not a huge fan of turning lasers on and off constantly throughout the day, so I'd prefer to have them behind an electronic shutter. It's difficult to imagine purchasing an instrument with fewer than 4 lasers, but perhaps costs may force me to. I'd probably want to run as many as 5 lasers simultaneously, so we're aiming for 5 interrogation points. I'd also really like to have the ability to send lasers to different interrogation points. Most of the time, you'd probably not run UV excitable and Violet excitable dyes at the same time, so they could probably share a pinhole. But, in the instance where you would like to run them simultaneously, you'd probably want to split them to different pinholes and maybe even separate them by a pinhole or two. This would require some re-engineering of the way lasers are delivered to the flow cell, but I have a couple of ideas of how this might work, so it looks plausible. In terms of actual laser wavelength, that's to be determined. I'd need to weigh the merits of a 550nm laser versus a 561nm laser, etc... Regarding power, all I'd add is that I don't want to buy a 100mW laser to get 50mW at the point of interrogation.

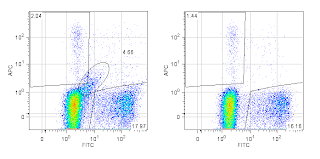
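The shared-pinhole idea above can be sketched as a small layout check. This is only an illustration, not how any vendor's software works. The `Laser` record, the `validate_layout` function, and the rule that two unshuttered lasers may not sit on the same pinhole are hypothetical, read off the paragraph above. The limit of 5 interrogation points is the one figure taken from the text.

```python
from dataclasses import dataclass

# From the text: run as many as 5 lasers simultaneously -> 5 interrogation points.
MAX_INTERROGATION_POINTS = 5


@dataclass(frozen=True)
class Laser:
    name: str           # e.g. "UV", "Violet" (labels only, no wavelengths assumed)
    pinhole: int        # interrogation point the beam is routed to (1-based)
    shutter_open: bool  # True if the electronic shutter is letting the beam through


def validate_layout(lasers):
    """Return a list of problems with a proposed layout (empty list if none)."""
    problems = []
    for laser in lasers:
        if not 1 <= laser.pinhole <= MAX_INTERROGATION_POINTS:
            problems.append(f"{laser.name}: pinhole {laser.pinhole} out of range")

    # Assumed rule: lasers may share a pinhole only if at most one is unshuttered.
    open_by_pinhole = {}
    for laser in lasers:
        if laser.shutter_open:
            open_by_pinhole.setdefault(laser.pinhole, []).append(laser.name)
    for pinhole, names in sorted(open_by_pinhole.items()):
        if len(names) > 1:
            problems.append(
                f"pinhole {pinhole}: {' and '.join(names)} both unshuttered"
            )
    return problems


# UV and Violet share pinhole 1; only Violet is unshuttered, so this passes.
shared = [Laser("UV", 1, False), Laser("Violet", 1, True), Laser("Blue", 2, True)]
print(validate_layout(shared))
```

If both UV and Violet were unshuttered on pinhole 1, the check would flag it, which is the case where you'd want to route them to separate interrogation points.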

Optics: Spectral overlap among fluorochromes excited off the same laser is to be avoided, so it really doesn't make any sense to have more than 3 detectors off any one laser line. As you put more and more detectors on a single path, you have no choice but to break the light up into smaller and smaller bits, so by default you'll be compromising on photon collection. Squeezing down the PE filter so you can run it, PETexasRed, and FITC all off the 488nm laser is absurd. You'd also want to stagger the emission filters so you're not looking at the same light from different paths that happen to excite off multiple lasers (think PerCP off the Blue and PECy5.5 off the Green - change this to PECy5 off the Green and PerCPCy5.5 off the Blue). However, it DOES make sense to be able to detect lots of different fluorochromes off any single laser line. How can this be accomplished? Through quick-change filters. Let's say we have 3 detectors off the Blue laser (SSC, FITC, and PerCP, for example). I'd like to use that FITC detector for FITC, CFSE, GFP, mVenus, Aldefluor, Sytox Green, etc. Most people will have a 530/30 filter on their 'FITC' channel, but this may not be optimal for all the different 'green' fluors you may use. So, one option would be to use a wider band pass on that channel, say a 525/50. This is fine until I need to turn on my Green laser for excitation of some fluorescent proteins like mBanana. In this case I'd want to change my GFP filter to something like a 510/20, but then change it back when I'm not doing fluorescent protein work.
Ideally, I'd like to tell the software which color combinations I'm using and have it adjust all the filters necessary to optimize fluorescence collection and minimize spectral overlap. In the meantime, I want a system that makes it easy to change filters, knows which filter is in which detector, and has a place to store filters not currently in use so they don't get all scratched up and full of dust.

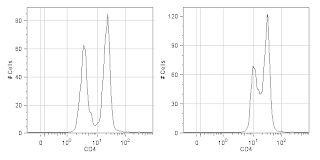
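To make the filter swaps above concrete, here is a rough sketch that turns the usual center/bandwidth filter names (530/30 means centered at 530nm and 30nm wide) into passband edges and measures how much two filters overlap. It assumes idealized filters with perfectly sharp edges at their nominal values, which real filters don't have, and the helper names `passband` and `overlap_nm` are made up for this example.

```python
def passband(name):
    """Parse 'center/width' in nm into (low, high) edges.

    Assumes an ideal top-hat filter with edges at center +/- width/2.
    """
    center, width = (float(x) for x in name.split("/"))
    return center - width / 2, center + width / 2


def overlap_nm(a, b):
    """Width in nm of the range passed by both filters (0.0 if they don't overlap)."""
    a_lo, a_hi = passband(a)
    b_lo, b_hi = passband(b)
    return max(0.0, min(a_hi, b_hi) - max(a_lo, b_lo))


# The three 'green' channel options mentioned in the text.
for f in ("530/30", "525/50", "510/20"):
    lo, hi = passband(f)
    print(f"{f}: {lo:.0f}-{hi:.0f} nm")
# 530/30: 515-545 nm
# 525/50: 500-550 nm
# 510/20: 500-520 nm
```

On these nominal numbers, swapping the 525/50 for the 510/20 pulls the upper edge of the channel in from 550nm to 520nm. That is the point of the swap when a Green laser is turned on, at the cost of a narrower collection window.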

Electronics: I used to scoff at those who said they needed 5 and 6+ logs on their cytometer, but I'm coming around a little bit. I could easily see my next cytometer having at least 5 logs of dynamic range, but only if it has the right electronic components to fill those 5-6 decades. See this post for some ideas regarding that: Putting an End to the Log Wars. There's really no reason why our instruments should not have really fast processors that can do fine-detail pulse processing. We're 11 years into the 21st century, yet we're using stuff developed in the 1980s. A great optical system is nothing without an equally great electronics system.
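One back-of-the-envelope way to think about "filling" those decades: an ideal linear digitizer with n bits can span at most log10(2^n) decades between a single count and full scale. The sketch below just does that arithmetic. It assumes ideal linear digitization and ignores noise, baseline, and any log amplification, so treat it as a lower bound on what the electronics need, not as a description of any particular instrument or of the linked post.

```python
import math


def decades_from_bits(bits):
    """Decades spanned by an ideal linear ADC, from 1 count up to full scale."""
    return bits * math.log10(2)


def min_bits_for_decades(decades):
    """Smallest whole number of bits whose full scale covers `decades` logs."""
    return math.ceil(decades * math.log2(10))


for d in (4, 5, 6):
    print(f"{d} decades -> at least {min_bits_for_decades(d)} bits")
# 4 decades -> at least 14 bits
# 5 decades -> at least 17 bits
# 6 decades -> at least 20 bits
```

Even at those bit depths, the bottom decade is only covered by counts 1 through 10, so it is resolved very coarsely. Having enough range to display 5 or 6 logs is not the same as having the resolution to fill them.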

Fluidics: In my eyes, hydrodynamic focusing is still king, whether it's in a small chip or in a flow cell. (See the edit below for clarification on how acoustic focusing is implemented specifically on the Attune. Acoustic focusing focuses the cells but not the sample fluid, so you end up picking up fluorescence from unbound fluors in the illumination volume - this is the same issue with capillary systems.) However, the sheath velocity going through the sensing area could be sped up to allow for higher event rates without increasing the size of the sample core stream. This, of course, would require better electronics with much higher resolution to sample the short pulses, and really good collection optics to collect as high a percentage of emitted photons as possible, not just the small fraction that happen to emit at 90 degrees to the incident light. I'd also caution against the desire to make super complicated fluidics systems that tend to break constantly (I'm looking at you, FACSAria I and FACSCanto-A).

Software: Flow cytometers are built by engineers, and it's usually the case that they find the engineer who knows most about writing software and say, "Let's get some software written to run this thing." There's usually not much usability testing, UI design thought, etc. The last batch of cytometers I've looked at have had a bit more polish on their software, so things look like they're headed in the right direction. The trend to borrow from MS Office and use ribbons all over the place is probably a safe bet. You'd have to assume Microsoft has done a bunch of usability testing, and if it's good enough for them, it's probably good enough for us. However, we're not word processing or making tables or even making presentations; we're adjusting hardware components using software tools, collecting data, and displaying that data on screen. So, in reality, we should be using a model that the everyday Jane Q. Researcher would be familiar with and that performs a similar task. I'll throw out a couple of examples. I love the OSD (On Screen Display) on my Samsung LCD television. It allows me to easily get in, adjust settings like Color mode, Brightness, and Sound, and get out, all without completely obstructing my view of the picture behind. Just swap Color mode, Brightness, and Sound for Parameters, Voltage, and Compensation, and swap the picture for plots, and there you go. If you're a photog, you probably use software like Aperture or Lightroom. These tools allow for some pretty specific settings and adjustments, but in a clean, easy-to-use interface. So, let's model our cytometer software after these types of tools instead of after a word processor.

Reagents: I want lots of antibody choices, which is only going to be possible from a company that has ties to research institutions that make new antibodies and are willing to license them to companies for profit (ahem, our Monoclonal Antibody Facility has done and continues to do this on a regular basis). I also want them coupled to a wide range of fluors, especially the new ones like the Brilliant Violet dyes. I want to be able to try before I commit, whether this be via a free sample or a really inexpensive small aliquot. I'm not at all concerned about having reagents tied to my equipment, and I actually dislike that trend. I'm not going to buy a cytometer because some company made a canned "apoptosis kit" that works specifically for their instrument, only to find out it's using Annexin V FITC and PI. Here are the 5 questions I ask when finding an antibody: Do they have the antibody? Is it coupled to a range of fluors that work for my cytometer configuration? Does it fit in my budget? Are there multiple size options? Is there support information so I know it's going to work?

So, there you have it. How much am I willing to spend on this instrument? Well, I'm willing to buy as much instrument as I need. If I want a 2-laser, 6-color instrument, I think it has to be priced around $100K. If I want an 8-laser, 15-FL detector (5 pinholes x 3 detectors) with all the filters I need to look at 45 distinct fluorochromes, I'd say it'd have to be around $350K.

Edit: A comment above about acoustic focusing may be only partially correct. Although it is true that acoustic focusing is responsible for focusing the cells and not the sample core stream, this is not how it has been implemented in the Life Technologies Attune Cytometer. In fact, the Attune has both acoustic focusing for the cells and hydrodynamic focusing for the sample core stream. When utilizing the low flow rate on the Attune (25ul/min), you can achieve a significant amount of core stream tapering due to a narrowing of the entire stream from a cross-sectional area of 340um (where the cells are being focused by the acoustic waveform) to a 200um cuvette (where laser interrogation occurs). In this case, the constriction of the entire stream provides the hydrodynamic force needed to narrow the core stream. When running the sample at a higher flow rate, you'd increase the size of the core stream proportionally, as happens on non-acoustically focused systems. However, in the case of the Attune, even at this higher volume flow rate, the cells still remain focused, leading to better and more uniform illumination by the lasers.
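For a rough feel of how much tapering that constriction could provide, here is a small continuity-of-flow sketch. It rests on several assumptions that are not in the text: the 340um and 200um figures are treated as linear dimensions of geometrically similar cross-sections, the fluid is incompressible, and the core keeps the same fraction of the stream as it narrows. The function name `constriction` is made up for this example.

```python
def constriction(d_in_um, d_out_um):
    """Scaling factors for a stream narrowing from d_in_um to d_out_um.

    Assumes similar cross-section shapes and incompressible flow, so that
    flow rate = area x mean velocity is conserved through the taper.
    """
    linear_ratio = d_in_um / d_out_um  # factor by which widths shrink
    area_ratio = linear_ratio ** 2     # factor by which area shrinks
    return {
        "linear_ratio": linear_ratio,  # core stream width narrows by this factor
        "area_ratio": area_ratio,      # mean velocity rises by this factor
    }


r = constriction(340, 200)
print(f"width narrows ~{r['linear_ratio']:.2f}x, "
      f"mean velocity rises ~{r['area_ratio']:.2f}x")
# width narrows ~1.70x, mean velocity rises ~2.89x
```

Under those assumptions the core stream would come out a bit under 60% of its original width, before any effect of changing the sample flow rate.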